We’re currently refreshing a client’s VI3 environment to vSphere 4.1 and procured a new server to add to the existing cluster. When I went to install ESXi on the new server, I did not have an ESXi 4.1 CD available, so I installed 4.0 and figured I’d update it with the vSphere Host Update Utility. Those who have read the vSphere 4.1 release notes probably already know that you cannot update a host from 4.0 to 4.1 with that utility, so this post shows how you can update the host with the vSphere CLI instead.

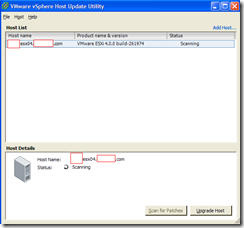

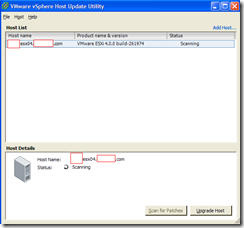

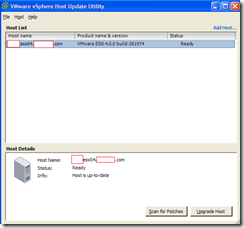

I started off by using the VMware vSphere Host Update Utility installed on my laptop to try to update the host, which was running ESXi 4.0.0 build-261974.

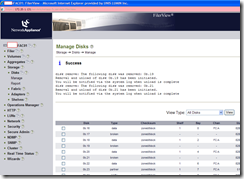

As shown in the following screenshot, scanning a fully patched ESXi 4.0.0 won’t give you an option to upgrade the host to version 4.1.0.

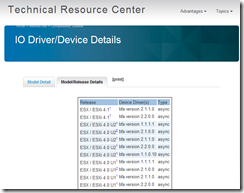

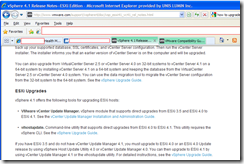

As per the following release notes:

http://www.vmware.com/support/vsphere4/doc/vsp_esxi41_vc41_rel_notes.html

ESXi Upgrades

vSphere 4.1 offers the following tools for upgrading ESXi hosts:

VMware vCenter Update Manager. vSphere module that supports direct upgrades from ESXi 3.5 and ESXi 4.0 to ESXi 4.1. See the vCenter Update Manager Installation and Administration Guide.

vihostupdate. Command-line utility that supports direct upgrades from ESXi 4.0 to ESXi 4.1. This utility requires the vSphere CLI. See the vSphere Upgrade Guide.

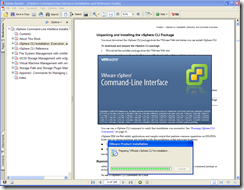

Reviewing the upgrade guide at: http://www.vmware.com/pdf/vsphere4/r41/vsp_41_upgrade_guide.pdf shows that we need to download and install the vSphere CLI client.

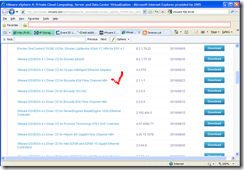

So I went ahead to download the client from http://www.vmware.com/downloads:

(The build I downloaded for this upgrade was: VMware-vSphere-CLI-4.1.0-254719.exe)

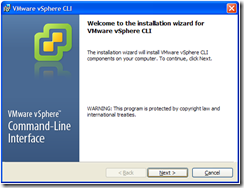

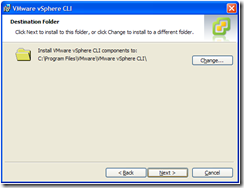

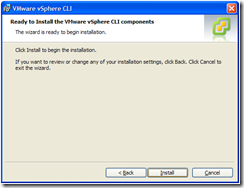

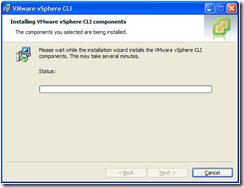

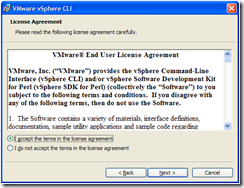

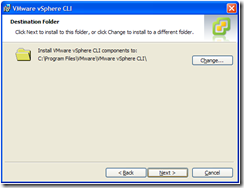

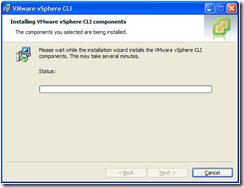

… and began installing it:

The following screen took an extremely long time to finish. I don’t remember having this issue on my last deployment, when I installed the CLI on a server, so my guess is that some other application on my laptop caused the delay.

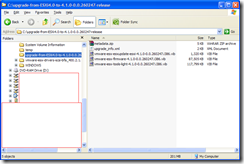

Once the installation completed, I went ahead and downloaded the upgrade package. Make sure you download the upgrade package in ZIP format and not the regular installable ISO, as the latter cannot be used to upgrade the host with the vSphere CLI.

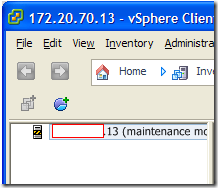

While the package downloads, we can use the wait to put the host into maintenance mode:

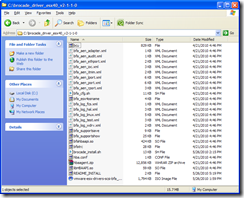
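If you would rather stay on the command line than use the vSphere Client for this step, the vSphere CLI also ships a `vicfg-hostops.pl` script. The sketch below only builds the command line and does not run it. The helper name `hostops_command` is my own, and the list of operations is what I recall from the vSphere CLI documentation, so treat it as an assumption and confirm with `vicfg-hostops.pl --help` on your install.

```python
from typing import List

# Operations I believe vicfg-hostops accepts (assumption, verify with --help).
VALID_OPERATIONS = ("enter", "exit", "reboot", "shutdown", "info")


def hostops_command(server: str, operation: str) -> List[str]:
    """Build (but do not run) a vicfg-hostops.pl command line.

    hostops_command is a hypothetical helper, not part of the vSphere CLI.
    """
    if operation not in VALID_OPERATIONS:
        raise ValueError(f"unsupported operation: {operation!r}")
    # The script prompts for username and password when they are not supplied.
    return ["vicfg-hostops.pl", "--server", server, "--operation", operation]


if __name__ == "__main__":
    # "enter" puts the host into maintenance mode.
    print(" ".join(hostops_command("172.20.70.13", "enter")))
```

The same helper with `reboot` and `exit` would cover the reboot and leaving maintenance mode after the upgrade.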

Once you’ve downloaded the zip package, DO NOT uncompress it. Simply place it into a directory of your choice and then open up the VMware vSphere CLI.
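A quick sanity check on the download can save a failed run. The error message vihostupdate prints later in this post complains about an invalid ZIP archive or a missing `metadata.zip` inside it, so the sketch below checks for both. The function name `check_bundle` is hypothetical, and the assumption that `metadata.zip` is the only thing worth checking comes purely from that error message.

```python
import os
import zipfile


def check_bundle(path: str) -> str:
    """Return "ok", or a short description of what looks wrong with the bundle."""
    if os.path.isdir(path):
        return "path is a directory; pass the original .zip instead"
    if not os.path.isfile(path):
        return "file not found"
    if not zipfile.is_zipfile(path):
        return "not a valid ZIP archive"
    with zipfile.ZipFile(path) as bundle:
        # ZIP entry names always use forward slashes, whatever the platform.
        names = [name.rsplit("/", 1)[-1] for name in bundle.namelist()]
    if "metadata.zip" not in names:
        return "ZIP archive has no metadata.zip inside"
    return "ok"


if __name__ == "__main__":
    print(check_bundle(r"c:\upgrade-from-ESXi4.0-to-4.1.0-0.0.260247-release.zip"))
```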

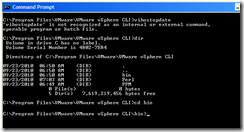

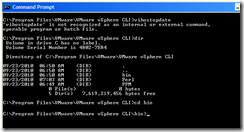

C:\Program Files\VMware\VMware vSphere CLI>vihostupdate

'vihostupdate' is not recognized as an internal or external command,

operable program or batch file.

C:\Program Files\VMware\VMware vSphere CLI>dir

Volume in drive C has no label.

Volume Serial Number is 4802-7E84

Directory of C:\Program Files\VMware\VMware vSphere CLI

09/23/2010 06:50 AM <DIR> .

09/23/2010 06:50 AM <DIR> ..

09/23/2010 06:50 AM <DIR> bin

09/23/2010 07:03 AM <DIR> Perl

09/23/2010 06:49 AM <DIR> PPM

0 File(s) 0 bytes

5 Dir(s) 7,619,219,456 bytes free

C:\Program Files\VMware\VMware vSphere CLI>cd bin

C:\Program Files\VMware\VMware vSphere CLI\bin>

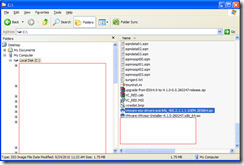

As shown in the above screenshot, the vihostupdate.pl script is actually in the C:\program files\VMware\VMware vSphere CLI\bin directory.

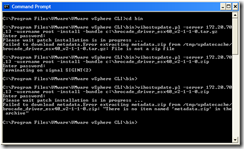

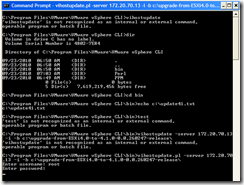

In the screenshot above, I actually made 2 mistakes. The first was running vihostupdate without the .pl extension.

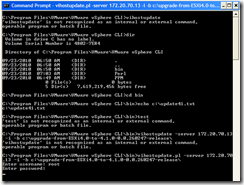

The 2nd mistake is shown in the screenshot below:

I originally unzipped the package because I thought vihostupdate.pl was supposed to be pointed at a directory, when in fact it expects the zip package itself. The following is the output, including the error you get if you specify a directory:

C:\Program Files\VMware\VMware vSphere CLI\bin>vihostupdate -server 172.20.70.13 -i -b c:\upgrade-from-ESXi4.0-to-4.1.0-0.0.260247-release\

'vihostupdate' is not recognized as an internal or external command,

operable program or batch file.

C:\Program Files\VMware\VMware vSphere CLI\bin>vihostupdate.pl -server 172.20.70.13 -i -b c:\upgrade-from-ESXi4.0-to-4.1.0-0.0.260247-release\

Enter username: root

Enter password:

Please wait patch installation is in progress ...

Invalid bundle ZIP archive, or missing metadata.zip inside.Bundle.zip [/tmp/updatecache/upgrade-from-ESXi4.0-to-4.1.0-0.0.260247-release]: File /tmp/updatecache/upgrade-from-ESXi4.0-to-4.1.0-0.0.260247-release is too small to be a .zip file

C:\Program Files\VMware\VMware vSphere CLI\bin>vihostupdate.pl -server 172.20.70.13 -i -b c:\upgrade-from-ESXi4.0-to-4.1.0-0.0.260247-release.zip

Once I specified the zip package instead, the update proceeded and completed properly:

C:\Program Files\VMware\VMware vSphere CLI\bin>vihostupdate.pl -server 172.20.70.13 -i -b c:\upgrade-from-ESXi4.0-to-4.1.0-0.0.260247-release.zip

Enter username: root

Enter password:

Please wait patch installation is in progress ...

The update completed successfully, but the system needs to be rebooted for the changes to be effective.

C:\Program Files\VMware\VMware vSphere CLI\bin>
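Both of my mistakes (leaving off the .pl extension and passing a directory to -b) are easy to guard against if you script the call. Here is a minimal sketch that only assembles the command line, using the same `-server`, `-i` and `-b` flags shown in the transcript above. The function name `vihostupdate_command` is hypothetical and not part of the vSphere CLI.

```python
from typing import List


def vihostupdate_command(server: str, bundle: str) -> List[str]:
    """Build (but do not run) the vihostupdate.pl upgrade command.

    Hypothetical helper; the flags mirror the ones used in this post.
    """
    # Mistake 2: vihostupdate.pl expects the zip package, not an extracted folder.
    if not bundle.lower().endswith(".zip"):
        raise ValueError("bundle must be the .zip file, not an extracted directory")
    # Mistake 1: the script has to be called with its .pl extension.
    return ["vihostupdate.pl", "-server", server, "-i", "-b", bundle]


if __name__ == "__main__":
    cmd = vihostupdate_command(
        "172.20.70.13",
        r"c:\upgrade-from-ESXi4.0-to-4.1.0-0.0.260247-release.zip",
    )
    print(" ".join(cmd))
```

To actually run it, you would start from the vSphere CLI bin directory so that vihostupdate.pl can be found, and it will still prompt for the username and password as shown above.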

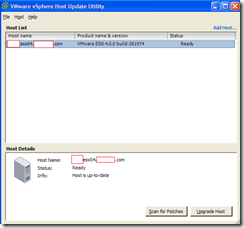

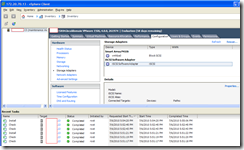

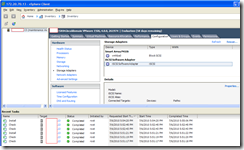

Reviewing what is displayed in the vSphere Client, we can see that the version is still 4.0.0 so all we need to do now is to reboot the server.

Note: Notice the Recent tasks below? Those are from the host update utility’s scans.

Once the host is successfully updated, you’ll see the correct version:

----------------------------------------------------------------------------------------------------------------------------------

Thoughts: Coming from a Windows background, I personally don’t like doing in-place upgrades, and a colleague told me that our practice lead recommends simply reinstalling ESXi on the host. The problem I have with reinstalling is that you lose all your settings, so if you have a lot of hosts, upgrading as shown in this post might be the better route to take.

I hope this has been helpful to other professionals out there and possibly even saves them some time.